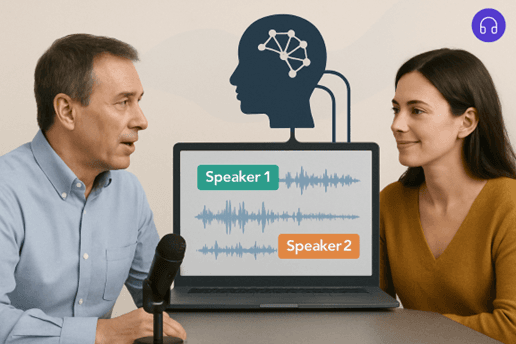

How to Separate Speakers in Audio Recordings Using AI: The Game-Changer for Content Creators

You've just finished recording a killer interview with three industry experts, and the conversation was great. Then you sit down to edit, and reality hits hard: the audio is a tangled mess.

#speaker-separation #audio-editing #podcasting #ai-technology
